Automation in Linux: Built for Focus, Not Speed

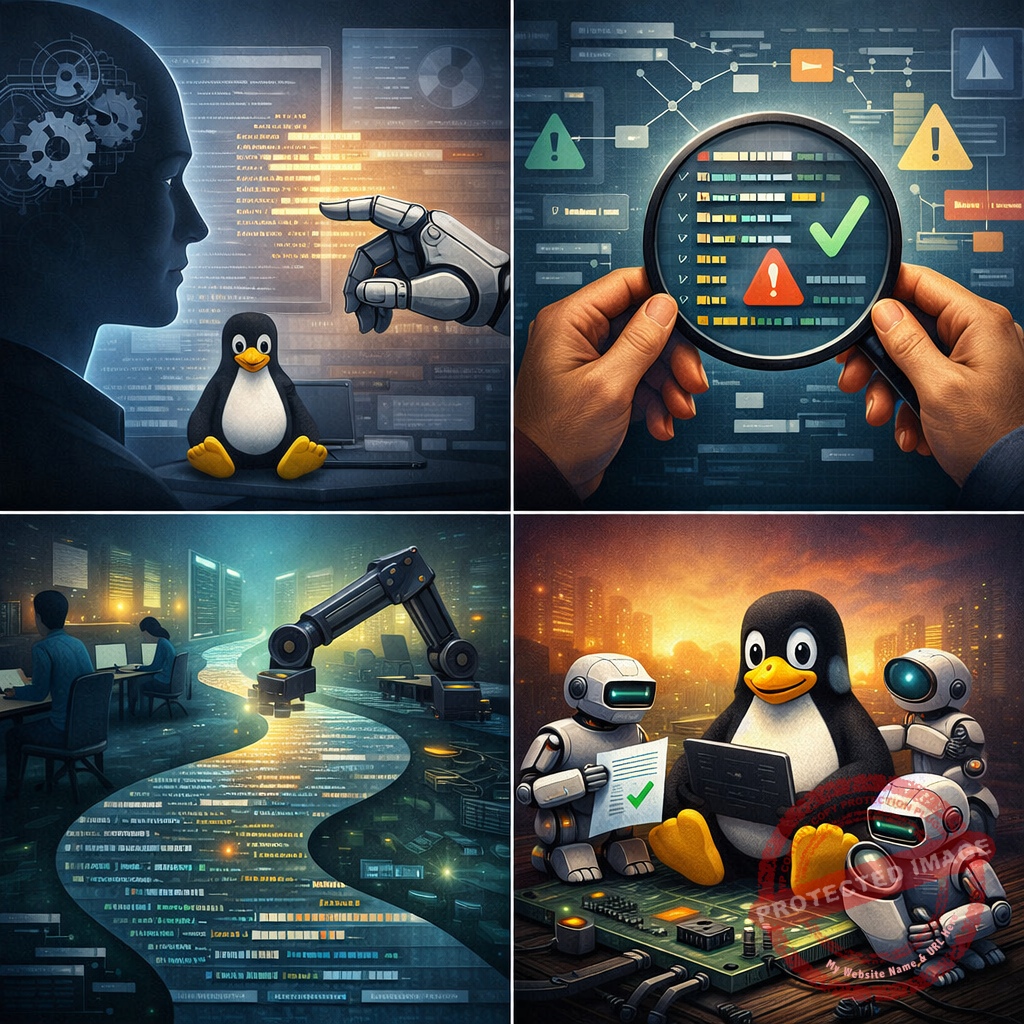

How Quiet Automation Entered Linux Kernel Development

For decades, Linux kernel development moved deliberately. Automation stayed at arm’s length. Artificial intelligence was avoided entirely. Merges happened slowly, diffs stayed simple, and tools rarely changed.

The real pressure did not come from speed. It came from fatigue. Messy code, tangled merges, and maintainers burning out under repetitive work slowly wore patience thin. Contributors stepped away. The system strained.

Change arrived quietly. No announcement. No revolution. Just gradual adoption of tools that did not decide, but helped reduce clutter. The goal was never replacement. It was clarity.

The Problem: Too Much Code, Too Little Signal

Every month, the Linux kernel absorbs an enormous number of patches. Over time, telling real improvements apart from rewrites, repeated work, or outdated styles became harder.

What slipped through the cracks was not effort, but attention. Humans had to review everything, even when patterns repeated themselves endlessly.

This is where automated tools stepped in. Not to judge quality, but to surface what mattered first.

These tools study past merges, reviewer comments, and accepted changes. When a new submission closely matches something approved before, it gets flagged for quicker human review. Approval remains human. Mental load drops.
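The matching step can be pictured with a small sketch. It assumes patches are compared as plain text using Python's standard `difflib`. The function names and the 0.8 threshold are illustrative assumptions, not a description of any tool the kernel community actually runs.

```python
from difflib import SequenceMatcher


def best_similarity(new_patch, accepted_patches):
    """Return the highest similarity ratio (0.0 to 1.0) between the new
    patch and any previously accepted patch. Returns 0.0 with no history."""
    return max(
        (SequenceMatcher(None, new_patch, old).ratio() for old in accepted_patches),
        default=0.0,
    )


def should_prioritize(new_patch, accepted_patches, threshold=0.8):
    """Flag a patch for quicker human review when it closely matches
    something approved before. The threshold is a hypothetical value.
    The result is only a hint; a person still approves or rejects."""
    return best_similarity(new_patch, accepted_patches) >= threshold
```

A flag here only changes the order in which a human looks at the patch. It never changes the outcome on its own.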

Automating the Boring, Not the Important

One of the earliest wins came from handling mechanical checks.

Spacing, naming conventions, comment formats. These used to demand constant vigilance from maintainers. It was tiring work, and small mistakes slipped through.

Now, automated tools scan new patches and compare them to how the kernel already looks. The standards are not invented by committees. They emerge from actual usage across millions of lines written over decades.

If a mixed-case naming pattern like bigSmallPart rarely appears in accepted code, the system notices. Since 2010, ninety-eight percent of similar cases have been rejected. That history becomes guidance, not a rule.

No hard commands. Just patterns.
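As a rough sketch of how a pattern can come from usage rather than from a rulebook, the snippet below measures how common mixed-case identifiers are in a body of accepted code and only offers hints when that share is low. The regular expressions, the function names, and the 5 percent cutoff are all assumptions made for illustration.

```python
import re

# Identifiers in C-like source text.
IDENT = re.compile(r"\b[A-Za-z_][A-Za-z0-9_]*\b")
# Lowercase start followed by one or more capitalized parts, e.g. bigSmallPart.
CAMEL = re.compile(r"^[a-z]+(?:[A-Z][a-z0-9]*)+$")


def is_camel_case(name):
    return bool(CAMEL.match(name))


def camel_case_share(source):
    """Fraction of identifiers in the source that are mixed-case."""
    names = IDENT.findall(source)
    if not names:
        return 0.0
    return sum(is_camel_case(n) for n in names) / len(names)


def naming_hints(patch_source, corpus_share, rare_below=0.05):
    """Return mixed-case names in the patch, but only when the style is
    rare in accepted code. The cutoff is hypothetical, and the result is
    a hint for a reviewer, not a rejection."""
    if corpus_share >= rare_below:
        return []
    return sorted({n for n in IDENT.findall(patch_source) if is_camel_case(n)})
```

The corpus decides what counts as unusual. If accepted code used mixed-case names widely, the same patch would produce no hints.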

Predicting Merge Conflicts Before They Happen

Another quiet shift is conflict prediction.

Instead of waiting for problems to surface after merges, tools now warn maintainers when certain combinations of changes have historically caused trouble. If two subsystems tend to clash and both are touched in a new update, a signal appears.

Nothing is blocked automatically. Maintainers can ignore it. But last year alone, similar warnings preceded several rollbacks.

Before, this relied entirely on gut feeling. Now, experience is backed by data.
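One simple way to back that gut feeling with data is to count how often pairs of subsystems were touched together and how often trouble followed. The sketch below assumes a history of past merges recorded as (subsystems touched, whether trouble followed). The record format, names, and thresholds are hypothetical.

```python
from collections import Counter
from itertools import combinations


def pair_stats(history):
    """Count, for each pair of subsystems touched together, how often the
    pair was seen and how often trouble followed.
    history: iterable of (set_of_subsystem_names, had_trouble)."""
    seen, trouble = Counter(), Counter()
    for subsystems, had_trouble in history:
        for pair in combinations(sorted(subsystems), 2):
            seen[pair] += 1
            if had_trouble:
                trouble[pair] += 1
    return seen, trouble


def risky_pairs(touched, seen, trouble, min_seen=3, min_rate=0.5):
    """Return subsystem pairs in a new change that have clashed often
    enough in the past to deserve a warning. Nothing is blocked."""
    warnings = []
    for pair in combinations(sorted(touched), 2):
        if seen[pair] >= min_seen and trouble[pair] / seen[pair] >= min_rate:
            warnings.append(pair)
    return warnings
```

The `min_seen` guard keeps a single bad merge from turning into a permanent warning, and the maintainer remains free to ignore the result.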

Matching Work to People Through Patterns

Linux development is scattered by nature. Hundreds of contributors work across time zones, skills, and specialties.

Some repeatedly fix ARM driver bugs. Others focus narrowly on file system locking. Work is uneven and constantly shifting.

Tools now help by spotting how people have worked in the past and quietly surfacing related tasks. Someone who solved many file lock issues may see the next one early, without being assigned.

No authority. No noise. Just relevance.

Quiet signals replace loud processes.
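A minimal sketch of that kind of quiet matching, assuming past fixes are recorded as (contributor, area) pairs. The area labels and the function name are made up for illustration.

```python
from collections import Counter


def suggest_contributors(issue_area, past_fixes, top_n=3):
    """Return the contributors with the most past fixes in the same area.
    past_fixes: list of (contributor, area) pairs.
    The result decides who sees the issue early; nobody is assigned."""
    counts = Counter(who for who, area in past_fixes if area == issue_area)
    return [who for who, _ in counts.most_common(top_n)]


past = [
    ("alice", "fs/locks"),
    ("alice", "fs/locks"),
    ("bob", "fs/locks"),
    ("carol", "arm/drivers"),
]
early_viewers = suggest_contributors("fs/locks", past)
```

Here `early_viewers` lists alice first and bob second, because alice has more file lock fixes in the history.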

Identifying Outdated Tools Before They Break Things

Old tools linger longer than they should. Documentation stops updating. Usage slowly drops.

Software now tracks how often kernel components are actually touched. Week by week, activity patterns emerge. When a component's usage stays below a clear threshold, that component gets flagged.

Nothing is removed automatically. A conversation starts.

Stability still comes first. But planned retirement avoids sudden failures. Old parts fade out by design, not accident.
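The flagging logic can be sketched in a few lines, assuming weekly touch counts are already collected per component. The threshold, the four-week window, and the component names are hypothetical.

```python
def stale_components(weekly_touches, threshold=1, weeks=4):
    """Return components whose activity stayed below the threshold for
    each of the most recent `weeks` weeks.
    weekly_touches: dict mapping component name to a list of weekly
    counts, oldest first. Components with too little history are skipped."""
    flagged = []
    for name, counts in weekly_touches.items():
        recent = counts[-weeks:]
        if len(recent) == weeks and all(c < threshold for c in recent):
            flagged.append(name)
    return sorted(flagged)


activity = {
    "old_driver": [3, 0, 0, 0, 0],
    "sched": [9, 8, 7, 9, 10],
    "new_module": [0, 0],
}
to_discuss = stale_components(activity)
```

Only `old_driver` ends up in `to_discuss`. The output is a list of topics for conversation, not a removal list.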

Letting Real-World Energy Data Shape Decisions

Performance and power usage now influence kernel decisions more directly.

Data center operators share anonymized performance logs with researchers and kernel developers. Over time, clear trends appear. Some scheduling methods handle cloud-style workloads far better than others.

These are not lab simulations. They come from real machines under real load.

As a result, small changes in how processors rest and wake are now guided by what actually happens in production. Measurements matter more than guesses.

Reducing Harmful Communication Without Silencing Anyone

Long email threads in Linux development sometimes drift into personal attacks. Handling this used to fall entirely on humans.

Now, language-aware software scans messages before they are sent. It does not remove content. Messages that match known aggression patterns are paused and reviewed manually.

The patterns are based on academic research, not opinions.

Discussion continues. Tempers cool first.
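The pause-and-review flow might look something like the sketch below. The single regular expression is a placeholder standing in for research-based patterns, which are not specified here, and the `Outbox` type and `submit` function are hypothetical.

```python
import re
from dataclasses import dataclass, field

# Placeholder only: real patterns would come from the research the
# tooling is based on, not from a hand-written word list.
PLACEHOLDER_PATTERNS = [re.compile(r"\bidiot\b", re.IGNORECASE)]


@dataclass
class Outbox:
    sent: list = field(default_factory=list)
    held_for_review: list = field(default_factory=list)


def submit(message, outbox, patterns=PLACEHOLDER_PATTERNS):
    """Send the message, or hold it unchanged for manual review when it
    matches a pattern. Content is never edited or deleted."""
    if any(p.search(message) for p in patterns):
        outbox.held_for_review.append(message)
        return "held"
    outbox.sent.append(message)
    return "sent"
```

A held message stays word for word as written. The only thing the software adds is a delay and a human look.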

Why This Is Not About AI Taking Over

None of these tools replace Linus Torvalds or the maintainers around him. They exist to support focus, not accelerate output.

This is not automation built for speed. It is built to prevent tired minds from missing critical details. One rejected patch can protect the entire system.

Linux stays stable because restraint is enforced and progress is not rushed.

What Actually Changed

No brain-like systems are writing kernel code. Generative tools are not pushing updates on their own.

Instead, small helpers sit quietly inside daily workflows. They smooth decisions, reduce repetition, and surface patterns that humans have already proven over time.

The surprise is not how far the tools could go. It is how carefully they are held back.

Control matters more than scale. How systems are run matters more than how fast they grow.

This was never a sudden shift. It was more like smoothing worn edges on familiar routines, just enough to carry them forward another decade.